It is really starting to feel like spring here in New Hampshire, so I've been reviving my Robomagellan robot:

[Figure: The current robot (not shown: Intel D435 sensor)]

Robomagellan was created by the Seattle Robotics Society. It is a small-scale autonomous vehicle race in which robots navigate between predefined start and finish points. The start and finish points are usually given as GPS coordinates and marked by orange traffic cones. In most versions of the competition there are also optional waypoints that the robot can navigate to in order to earn bonus points. The race is usually held on mixed pedestrian terrain, which can include obstacles such as park benches, curbs, trees, bushes, hills, and people.

Unfortunately, there are not many Robomagellan contests happening anymore, but this platform is still a good testbed for outdoor navigation. I actually started building this robot in 2012, when Robomagellan was quite popular, and worked on it briefly in 2014 and 2018. The GitHub contributions view tells this story quite well:

[Figure: Contribution timeline for Robomagellan]

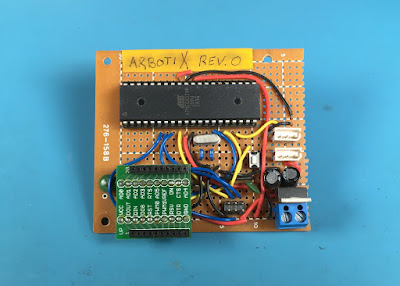

- The computer was originally a TurtleBot laptop; it has since been swapped out for an Intel NUC. I've previously posted about how I power the NUC using a 12V-to-19V step-up converter.

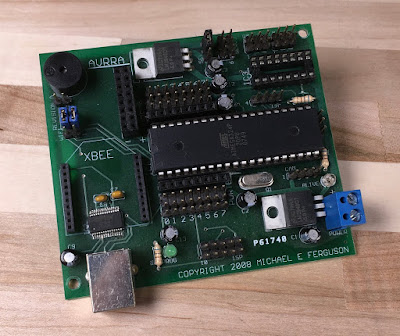

- The original version of the Etherbotix was designed for this robot. It now uses the later Etherbotix design with a plug-in motor driver.

- The robot now has an Adafruit Ultimate GPS v3. That may change in the near future, as I've been looking at setting up an RTK solution here on the farm.

- The robot originally used a small chip-level IMU on the Etherbotix, but now uses a UM7 for better results. That said, I never had any luck with the UM7's internal EKF (even after trying to calibrate it), so there are probably plenty of cheaper options out there.

- Originally, the main sensor was going to be a Hokuyo UTM-30 on a tilting servo. I've since simplified that to an Intel D435 depth sensor.

- The robot still uses the original wheels; however, I switched from 100:1 gearboxes to 50:1 to get more speed (the 100:1 gearboxes provided far more torque than needed: the robot could literally climb a wall).
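Swapping gearboxes trades torque for speed roughly in proportion to the ratio. The sketch below works through that arithmetic for an ideal, lossless gearbox; the motor speed and wheel diameter are hypothetical placeholders, since the post doesn't list them.

```python
import math


def ground_speed_mps(motor_rpm: float, gear_ratio: float, wheel_diameter_m: float) -> float:
    """Ground speed for a motor spinning at motor_rpm through a gear_ratio:1 gearbox."""
    wheel_rpm = motor_rpm / gear_ratio
    # One wheel revolution covers pi * diameter; divide by 60 to go from per-minute to per-second.
    return wheel_rpm * math.pi * wheel_diameter_m / 60.0


def wheel_torque_nm(motor_torque_nm: float, gear_ratio: float) -> float:
    """Output torque of an ideal (lossless) gearbox."""
    return motor_torque_nm * gear_ratio


# Hypothetical values for illustration only, not measurements from this robot.
MOTOR_RPM = 6000.0
WHEEL_DIAMETER_M = 0.15

for ratio in (100.0, 50.0):
    v = ground_speed_mps(MOTOR_RPM, ratio, WHEEL_DIAMETER_M)
    print(f"{ratio:.0f}:1 -> {v:.2f} m/s")
# prints "100:1 -> 0.47 m/s" then "50:1 -> 0.94 m/s"
```

With any motor, halving the ratio doubles the ground speed and halves the wheel torque; real gearboxes add friction losses that this ignores.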

As you probably guessed, the robot runs ROS. Specifically, I'm using the following packages:

- etherbotix_python - these are my drivers for the Etherbotix board. In addition to controlling the motors and providing odometry, this board acts as a serial-to-Ethernet adapter for the GPS module. The drivers publish the raw NMEA sentences sent by the GPS into ROS.

- um7 - this is the driver for the UM7 IMU.

- nmea_navsat_driver - this is used to convert NMEA sentences into a sensor_msgs/NavSatFix message.

- imu_transformer - this is used to transform the IMU data into the base_link frame. My IMU is actually mounted "upside down", so this is super important.

- imu_filter_madgwick - this is used to track the orientation of the IMU. Importantly, it fuses the magnetometer data, allowing the IMU to act as a compass for the global EKF.

- robot_localization - I use two instances of the EKF node. The first fuses the IMU with the wheel odometry to get a good local odometry frame. The second fuses the IMU, wheel odometry and GPS (processed by navsat_transform_node) into a global odometry estimate.

- rviz_satellite - not used on the robot itself, but an awesome RVIZ plugin that can download satellite imagery tiles and display them under the other data.
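To make the GPS part of this chain more concrete, here is a minimal sketch of the conversion nmea_navsat_driver performs for one sentence type, GGA: validate the XOR checksum, then turn NMEA's degrees-and-minutes fields into signed decimal degrees. This is an illustrative reimplementation, not that package's code; it handles only GGA and returns a plain tuple rather than a sensor_msgs/NavSatFix.

```python
from functools import reduce
from typing import Optional, Tuple


def nmea_checksum_ok(sentence: str) -> bool:
    """Check the XOR checksum of all characters between '$' and '*'."""
    if not sentence.startswith("$") or "*" not in sentence:
        return False
    body, _, checksum = sentence[1:].partition("*")
    calc = reduce(lambda acc, ch: acc ^ ord(ch), body, 0)
    try:
        return calc == int(checksum[:2], 16)
    except ValueError:
        return False


def dm_to_degrees(value: str, hemisphere: str) -> float:
    """Convert NMEA (d)ddmm.mmmm plus N/S/E/W into signed decimal degrees."""
    dot = value.index(".")
    degrees = float(value[: dot - 2])  # everything before the two minute digits
    minutes = float(value[dot - 2 :])  # mm.mmmm
    result = degrees + minutes / 60.0
    return -result if hemisphere in ("S", "W") else result


def parse_gga(sentence: str) -> Optional[Tuple[float, float, float, int]]:
    """Return (latitude, longitude, altitude_m, fix_quality), or None if invalid or no fix."""
    sentence = sentence.strip()
    if not nmea_checksum_ok(sentence):
        return None
    fields = sentence[1:].split("*")[0].split(",")
    if not fields[0].endswith("GGA"):  # accepts GPGGA, GNGGA, ...
        return None
    fix_quality = int(fields[6] or 0)
    if fix_quality == 0:  # 0 means "no fix"
        return None
    lat = dm_to_degrees(fields[2], fields[3])
    lon = dm_to_degrees(fields[4], fields[5])
    altitude = float(fields[9])  # meters above mean sea level
    return lat, lon, altitude, fix_quality


if __name__ == "__main__":
    print(parse_gga("$GPGGA,123519,4807.038,N,01131.000,E,1,08,0.9,545.4,M,46.9,M,,*47"))
```

The sample sentence is a commonly used GGA example at 48°07.038' N, 11°31.000' E; changing any character without updating the checksum makes `parse_gga` return `None`.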
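Because the IMU is mounted upside down, every vector it reports has to be rotated into base_link before the filters can use it. The sketch below shows that idea for the angular velocity and linear acceleration fields, assuming "upside down" means a 180 degree rotation about the X axis. On the robot, imu_transformer gets the actual mounting rotation from TF, and a complete transform also has to handle the orientation quaternion, which this sketch skips.

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def rot_x(angle_rad: float) -> Matrix:
    """Rotation matrix about the X axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[1.0, 0.0, 0.0],
            [0.0, c, -s],
            [0.0, s, c]]


def apply(r: Matrix, v: Sequence[float]) -> Vector:
    """Rotate vector v (expressed in the IMU frame) into the base_link frame."""
    return [sum(r[i][j] * v[j] for j in range(3)) for i in range(3)]


# Assumption: IMU rotated 180 degrees about X relative to base_link.
# Translation is ignored here; it doesn't affect angular velocity, and for
# linear acceleration it only matters while the robot is rotating.
IMU_TO_BASE = rot_x(math.pi)

# At rest, an upside-down accelerometer reports +1 g along its own -Z axis.
accel_imu = [0.0, 0.0, -9.81]
gyro_imu = [0.0, 0.0, 0.2]  # rad/s about the sensor's Z axis

accel_base = apply(IMU_TO_BASE, accel_imu)
gyro_base = apply(IMU_TO_BASE, gyro_imu)
```

After the rotation, gravity shows up as +9.81 m/s² on base_link's Z axis (Z up, as REP 103 expects), and a positive rate about the sensor's Z axis becomes a negative yaw rate about base_link's Z axis. Skipping this step would make the EKF think the robot turns the wrong way.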

Setting up global localization took me a while. To make the process easier, I set up my main launch file with an "offline_mode" argument that skips launching the drivers. A separate launch file runs only the drivers and records bagfiles. I can then change anything in my processing pipelines and re-run the bagfiles locally. This has been quite useful, as I've been tweaking the IMU processing pipeline in parallel with adding the global EKF.
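Global localization hinges on expressing GPS fixes as metric x/y offsets that the EKF can fuse alongside odometry. navsat_transform_node handles this (it works in UTM coordinates and uses the IMU heading to align the frames). As a simpler illustration, the sketch below converts latitude/longitude into approximate east/north meters relative to a datum using a local flat-earth (equirectangular) approximation instead of UTM. The datum is hypothetical, and the approximation is only reasonable over a few kilometers.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical approximation


def to_local_enu(lat_deg: float, lon_deg: float,
                 lat0_deg: float, lon0_deg: float) -> Tuple[float, float]:
    """Approximate (east, north) offset in meters of (lat, lon) from a datum (lat0, lon0)."""
    d_lat = math.radians(lat_deg - lat0_deg)
    d_lon = math.radians(lon_deg - lon0_deg)
    # Meridians converge toward the poles, so longitude is scaled by cos(latitude).
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(lat0_deg))
    north = EARTH_RADIUS_M * d_lat
    return east, north


# Hypothetical datum, e.g. where the start cone sits.
DATUM = (43.0, -71.5)
east, north = to_local_enu(43.001, -71.499, *DATUM)
```

A thousandth of a degree of latitude is about 111 m north, while the same change in longitude is only about 81 m east at 43° N, which is why a naive degrees-to-meters conversion skews tracks.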

Satellite Imagery in RVIZ

Visualization is always a powerful tool. While RVIZ doesn't have much going for outdoor robots out of the box, the rviz_satellite plugin makes it awesome.

[Figure: rviz_satellite overlay with some odometry tracks]

The one challenging part of rviz_satellite is setting the "Object URI". For an off-road robot, the default OpenStreetMap tiles don't show much. I ended up using Mapbox satellite imagery, but getting the Object URI right took a bit of digging. It turns out the correct URI is:

https://api.mapbox.com/styles/v1/mapbox/satellite-v9/tiles/256/{z}/{x}/{y}?access_token=XYZ
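The {z}/{x}/{y} placeholders in that URI follow the standard Web Mercator ("slippy map") tile scheme: at zoom level z the world is split into a 2^z by 2^z grid of tiles, and each tile is a separate image request (256 pixels square here). As a sketch of what ends up being requested, the helper below computes which tile contains a given latitude/longitude and fills in the Mapbox URI. It illustrates the tile math rather than rviz_satellite's actual code, and the coordinates and token in the usage are placeholders.

```python
import math
from typing import Tuple

MAPBOX_TEMPLATE = ("https://api.mapbox.com/styles/v1/mapbox/satellite-v9/tiles/256/"
                   "{z}/{x}/{y}?access_token={token}")


def latlon_to_tile(lat_deg: float, lon_deg: float, zoom: int) -> Tuple[int, int]:
    """Web Mercator tile (x, y) containing a point; valid for |lat| below about 85.05 deg."""
    n = 2 ** zoom
    lat_rad = math.radians(lat_deg)
    x = int((lon_deg + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    return x, y


def tile_url(lat_deg: float, lon_deg: float, zoom: int, token: str) -> str:
    """Fill the Mapbox satellite URI template for the tile containing (lat, lon)."""
    x, y = latlon_to_tile(lat_deg, lon_deg, zoom)
    return MAPBOX_TEMPLATE.format(z=zoom, x=x, y=y, token=token)


# Placeholder coordinates and token, for illustration only.
print(tile_url(45.0, -90.0, 2, "XYZ"))
```

Each distinct (z, x, y) triple is a separate tile, which matters for the monthly request limit: raising the zoom level by one quadruples the number of tiles needed to cover the same area.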

Also, free Mapbox accounts are limited to 200k tile requests per month. To avoid using these up, you might want to run a separate roscore so you can keep RVIZ running even when you restart the robot launch file. That said, I've only used 148 tile requests this month, even though I've been restarting RVIZ quite a bit.

Next Steps

I only recently got global localization working, so I'll probably continue to tweak things. The D435 drivers are now working pretty reliably, so the next step is to mount the D435 on the robot, start integrating its data, and move on to some basic navigation. I also plan to clean up the IMU calibration code I wrote and get it merged into robot_calibration.